A research team led by Jackson Laboratory (JAX) Associate Professor Vivek Kumar, Ph.D., has developed a non-intrusive method to accurately and continuously measure mouse body mass using computer vision. The approach aims to reduce the stress associated with traditional weighing techniques, improving the quality and reproducibility of biomedical research involving mice.

In both human health and biomedical research, body mass is a critical metric, often serving as an indicator of overall health and a predictor of potential health issues. For researchers working with mice—the most common subjects in preclinical studies—measuring body mass has traditionally involved removing the animals from their cages and placing them on a scale.

This process can be stressful for the mice, introducing variables that can affect the outcome of experiments. Moreover, these measurements are typically taken only once every few days, which further limits the accuracy and reproducibility of the data.

"We recognized the need for a better method to accurately and noninvasively measure animal mass over time," said Kumar. "The traditional approach not only stresses the mice but also limits the frequency and reliability of measurements, which can weaken the validity of experimental results."

To address this challenge, Kumar and his team of computational scientists and software engineers, including first author Malachy Guzman, as well as Brian Geuther, M.S. and Gautam Sabnis, Ph.D., turned to computer vision technology. Guzman, a rising senior at Carleton College, was a JAX summer student and continued the project as an academic year intern with the Kumar Lab.

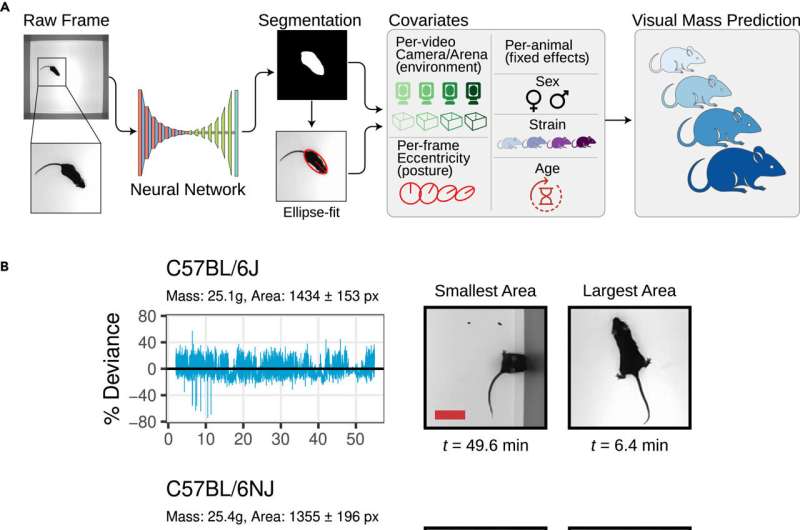

By analyzing one of the largest mouse video datasets, which Kumar had previously used to assess grooming behavior as well as gait and posture, the team developed a method to calculate body mass with less than 5% error. The findings, published in the Aug. 7 advance online issue of Patterns, offer a new resource for researchers that promises to enhance the quality of a wide range of preclinical studies.

The research team faced several challenges in developing this method. Unlike the relatively static animals whose body mass is measured visually in industrial farming, mice are highly active and flexible. They frequently change posture and shape, much like a deformable object.

Additionally, the team worked with 62 different mouse strains, ranging in mass from 13 grams to 45 grams, each with unique sizes, behaviors, and coat colors. That diversity required multiple visual metrics, machine learning tools, and statistical modeling to achieve the desired level of accuracy.

"In the video data, only 0.6% of the pixels belonged to each mouse, but we were able to apply computer vision methods to predict the body mass of individual mice," Guzman explained.

"By training our models with genetically diverse mouse strains, we ensured that they could handle the variable visual and size distributions commonly seen in laboratory settings. Ultimately, our statistical models to predict mass can be used to carry out genetic and pharmacological experiments."
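As a rough illustration of the general idea of predicting mass from the pixels that belong to a mouse, the sketch below fits a straight line from segmented pixel area to scale mass and reports the percent error. It is not the team's published model, which the article describes only as combining multiple visual metrics, machine learning tools, and statistical modeling. The function names, the use of a per-video median, the single-feature linear fit, and all numbers are assumptions made up for this example.

```python
from statistics import median


def mouse_area(mask):
    """Count foreground pixels in one binary mask (a list of rows of 0/1)."""
    return sum(sum(row) for row in mask)


def median_area(masks):
    """Median pixel area across frames (assumed here to damp posture changes)."""
    return median(mouse_area(m) for m in masks)


def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def mean_abs_pct_error(predicted, actual):
    """Mean absolute error as a percentage of the true value."""
    return 100 * sum(abs(p - a) / a for p, a in zip(predicted, actual)) / len(actual)


# Hypothetical training data: median pixel area per video and scale mass in grams.
areas = [400.0, 600.0, 800.0, 1000.0]
masses = [15.0, 25.0, 35.0, 45.0]

slope, intercept = fit_line(areas, masses)
predicted = [slope * a + intercept for a in areas]
print(f"mass ~ {slope:.3f} * area + {intercept:.1f}")
print(f"mean absolute percent error: {mean_abs_pct_error(predicted, masses):.2f}%")
```

In practice, the error would be measured on animals held out from the fit. The perfectly linear toy data here serves only to show the calculation.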

This new method offers several key advantages for researchers. It enables the detection of small but significant changes in body mass over multiple days, which could be crucial for studies involving drug or genetic manipulations.

Additionally, the method has the potential to serve as a diagnostic tool for general health monitoring and can be adapted to different experimental environments and other organisms in the future.

More information: Malachy Guzman et al, Highly accurate and precise determination of mouse mass using computer vision, Patterns (2024). DOI: 10.1016/j.patter.2024.101039

Citation: Researchers develop stress-free method to weigh mice using computer vision (2024, August 8) retrieved 8 August 2024 from https://techxplore.com/news/2024-08-stress-free-method-mice-vision.html
