Smart cities are crucial for sustainable urban development because they manage growing populations through advanced sensor networks and digital communication systems. Smart monitoring, a key service in these cities, covers both indoor and outdoor environments. Outdoors, for example, it can improve road safety for autonomous vehicles by predicting potential accidents at intersections and alerting the vehicles. Indoors, it supports vulnerable individuals by automatically notifying authorities during emergencies.

Cameras and light detection and ranging (LiDAR) sensors are commonly used for smart monitoring. LiDAR sensors provide three-dimensional (3D) visual information but no color. Because LiDAR relies on reflected laser light, objects leave blind spots behind them, making it difficult to cover the entire monitored area. This can be addressed by building a network of multiple LiDAR sensors installed at different positions, which increases the number of points that can be acquired per frame.
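To make the multi-LiDAR idea concrete, the sketch below merges one frame from several sensors into a single point cloud. It is a minimal illustration, not the authors' pipeline: it assumes each sensor's pose is already calibrated and, for simplicity, reduces that pose to a rotation about the vertical axis plus a translation. The names `to_world` and `merge_frames` are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]
# One sensor's frame: (points in sensor coordinates, yaw in radians, sensor position in world coordinates).
# Assumption: poses are known and involve only a rotation about z; real calibrations use full 3D rotations.
SensorFrame = Tuple[List[Point], float, Point]


def to_world(points: List[Point], yaw_rad: float, offset: Point) -> List[Point]:
    """Rotate sensor-frame points about the z-axis by yaw_rad, then translate them by offset."""
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    ox, oy, oz = offset
    return [(c * x - s * y + ox, s * x + c * y + oy, z + oz) for x, y, z in points]


def merge_frames(frames: Sequence[SensorFrame]) -> List[Point]:
    """Combine one frame from every sensor into a single point cloud in the shared world frame."""
    merged: List[Point] = []
    for points, yaw_rad, offset in frames:
        merged.extend(to_world(points, yaw_rad, offset))
    return merged


# Two sensors facing each other across a 2 m gap both observe the same spot at x = 1 m.
front = ([(1.0, 0.0, 0.5)], 0.0, (0.0, 0.0, 0.0))
back = ([(1.0, 0.0, 0.5)], math.pi, (2.0, 0.0, 0.0))
cloud = merge_frames([front, back])
```

Points seen from opposite sides land at the same world coordinates, so an area hidden from one sensor can be filled in by another. The merged cloud also grows with every sensor added, which is what puts pressure on network bandwidth.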

However, a multi-LiDAR network creates another problem: its limited bandwidth cannot support real-time transmission of all the additional points. Previous research has proposed a data selection method that sends the server only the data from significant regions in 3D space. These studies, however, did not detail how to accurately define and estimate such important regions within the point cloud.
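The data selection idea described above can be sketched as a filter applied at each sensor before transmission: only points that fall inside voxels marked as important are sent. This is a minimal illustration under stated assumptions, not the method from the earlier studies: the set of important voxels is taken as given (estimating it is the open problem), voxels are assumed to be axis-aligned cubes, and each point is assumed to cost 12 bytes (three 32-bit floats). All names are hypothetical.

```python
import math
from typing import Iterable, List, Set, Tuple

Point = Tuple[float, float, float]
VoxelKey = Tuple[int, int, int]

# Assumption: each point is sent as x, y, z in 32-bit floats (no intensity or timestamp).
BYTES_PER_POINT = 12


def voxel_key(p: Point, voxel_size: float) -> VoxelKey:
    """Integer index of the axis-aligned cubic voxel that contains point p."""
    return (math.floor(p[0] / voxel_size),
            math.floor(p[1] / voxel_size),
            math.floor(p[2] / voxel_size))


def select_for_transmission(points: Iterable[Point], important: Set[VoxelKey],
                            voxel_size: float) -> List[Point]:
    """Keep only the points whose voxel is in the set of important voxels."""
    return [p for p in points if voxel_key(p, voxel_size) in important]


frame = [(0.2, 0.3, 0.1), (1.5, 0.2, 0.1), (0.9, 0.9, 0.9), (3.0, 3.0, 0.0)]
important = {(0, 0, 0)}  # hypothetical output of an important-region estimator
sent = select_for_transmission(frame, important, voxel_size=1.0)
full_bytes = len(frame) * BYTES_PER_POINT
sent_bytes = len(sent) * BYTES_PER_POINT
```

If the important set is wrong, relevant points are silently dropped or useless ones are sent, which is why estimating that set accurately matters.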

To close this gap, a team of researchers led by master's course student Kenta Azuma from the Graduate School of Electric Engineering and Computer Science at Shibaura Institute of Technology developed a new method for accurately estimating important regions in a 3D sensor network.

"In this study, we utilized spatial features, which are created based on multiple spatial metrics, to estimate important regions. The important regions depend on the tasks. For example, for detecting accident-prone spots, the important regions are the spaces where moving objects, such as people and vehicles, are likely to be located. Our method accurately identifies such regions," explains Azuma.

The team also included Professor Ryoichi Shinkuma from Shibaura Institute of Technology, and Koichi Nihei and Takanori Iwai from NEC Corporation's Secure System Platform Research Laboratories. Their study was published in IEEE Sensors Journal on April 1, 2023.

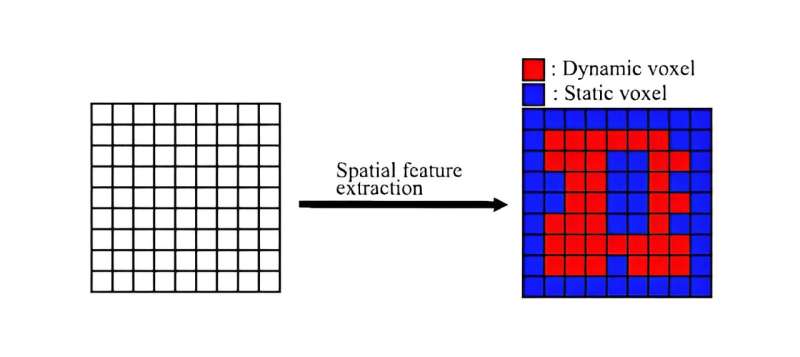

The researchers divided the point cloud into small regions called voxels. The important regions were termed dynamic voxels, representing the spaces that people pass through. The remaining regions were called static voxels; these covered walls, ceilings, and other spaces through which people do not pass.
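Dividing a point cloud into voxels amounts to mapping each point to the integer index of the cell that contains it. The sketch below assumes axis-aligned cubic voxels of a fixed edge length (the article mentions sizes of 1 m and 1.25 m) and counts how many points fall into each voxel in one frame; per-voxel counts like these are a natural input for voxel-level metrics. The function name is hypothetical, and this is not presented as the authors' implementation.

```python
import math
from collections import Counter
from typing import Iterable, Tuple

Point = Tuple[float, float, float]


def count_points_per_voxel(points: Iterable[Point], voxel_size: float) -> Counter:
    """Map each point to its voxel index (floor of coordinate / edge length) and count points per voxel."""
    counts: Counter = Counter()
    for x, y, z in points:
        key = (math.floor(x / voxel_size), math.floor(y / voxel_size), math.floor(z / voxel_size))
        counts[key] += 1
    return counts


frame = [(0.1, 0.1, 0.1), (0.9, 0.5, 0.2), (1.1, 0.0, 0.0), (-0.2, 0.0, 0.0)]
counts = count_points_per_voxel(frame, voxel_size=1.0)
```

Because the index uses `math.floor` rather than truncation, points with small negative coordinates map to voxel -1 instead of collapsing into voxel 0.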

"To accurately estimate these important regions, it is necessary to use multiple spatial metrics to create spatial features (SFs). We used two types of spatial metrics to create SFs: the temporal metric and the statistical metric," details Azuma. The temporal metric is based on long-term changes in the number of points acquired by the LiDAR sensors, while the statistical metric represents the difference in the number of points from frame to frame.
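The exact formulas for the two metrics are not given here, so the sketch below uses simple stand-ins that follow the verbal descriptions, computed from one voxel's point count in each frame: the temporal metric is taken as the range (maximum minus minimum) of the count over the whole observation window, and the statistical metric as the mean absolute change in the count between consecutive frames. Both definitions and the function names are assumptions for illustration.

```python
from typing import Sequence


def temporal_metric(counts: Sequence[int]) -> int:
    """Assumed stand-in: long-term change, measured as max minus min count over the window."""
    return max(counts) - min(counts)


def statistical_metric(counts: Sequence[int]) -> float:
    """Assumed stand-in: mean absolute change in point count between consecutive frames.

    Requires at least two frames.
    """
    diffs = [abs(b - a) for a, b in zip(counts, counts[1:])]
    return sum(diffs) / len(diffs)


# Per-frame point counts for two hypothetical voxels.
wall = [50, 50, 50, 50, 50, 50]   # a static surface returns the same number of points
walkway = [0, 0, 12, 30, 0, 0]    # a person walks through for two frames
```

A wall scores zero on both stand-ins, while a voxel that people pass through scores high on both. They can still disagree: an object that is moved once and left in place changes the long-term range but, over a long recording, contributes little to the average frame-to-frame change.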

The team then evaluated, through experiments, how accurately these metrics estimate important regions. Using point cloud data acquired by multiple LiDAR sensors in three indoor scenarios in which people were moving, they found that dynamic voxels could be identified up to 10% more accurately with both metrics than with either metric alone.
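One way to see why combining metrics can help is to classify voxels with a threshold on each metric and compare the rules. The sketch assumes a voxel is labeled dynamic only when both metrics reach their thresholds; how the study actually combines the metrics is not described here, and the toy values below are invented for illustration, not taken from its experiments. Function names are hypothetical.

```python
from typing import Callable, Dict, List, Tuple

# (temporal metric, statistical metric, ground-truth "is dynamic") for each voxel.
Sample = Tuple[float, float, bool]


def accuracy(samples: List[Sample], predict: Callable[[float, float], bool]) -> float:
    """Fraction of voxels whose predicted label matches the ground truth."""
    correct = sum(1 for t, s, truth in samples if predict(t, s) == truth)
    return correct / len(samples)


def compare_rules(samples: List[Sample], t_thr: float, s_thr: float) -> Dict[str, float]:
    """Accuracy of single-metric rules versus the assumed AND-combination of both metrics."""
    rules = {
        "temporal_only": lambda t, s: t >= t_thr,
        "statistical_only": lambda t, s: s >= s_thr,
        "both": lambda t, s: t >= t_thr and s >= s_thr,
    }
    return {name: accuracy(samples, rule) for name, rule in rules.items()}


toy = [
    (30, 12.0, True),   # walkway: people pass through
    (0, 0.0, False),    # wall
    (25, 0.5, False),   # object moved once: large long-term change, little per-frame change
    (4, 3.0, False),    # sensor noise: jittery counts but small long-term change
]
scores = compare_rules(toy, t_thr=10, s_thr=2)
```

Each single-metric rule misclassifies one of the two distractor voxels, while the combined rule rejects both. This only illustrates the mechanism; the study's reported gain comes from its own data.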

They also used machine learning to determine the best combination of threshold values for classifying a voxel as dynamic based on the two metrics. The accuracy obtained with these learned thresholds was comparable to that achieved with the optimal values used in the experiments. In addition, increasing the voxel size from 1 meter to 1.25 meters degraded the accuracy.
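The study used machine learning to choose the two thresholds. As a simpler stand-in, and explicitly not the authors' method, the sketch below runs an exhaustive grid search over candidate threshold pairs and keeps the pair with the highest accuracy on labeled voxels. The AND rule, the candidate grids, the toy data, and the function name are assumptions for illustration.

```python
from itertools import product
from typing import List, Sequence, Tuple

# (temporal metric, statistical metric, ground-truth "is dynamic") for each voxel.
Sample = Tuple[float, float, bool]


def grid_search(samples: List[Sample], t_candidates: Sequence[float],
                s_candidates: Sequence[float]) -> Tuple[float, float, float]:
    """Return (t_thr, s_thr, accuracy) for the best threshold pair; ties keep the first pair tried."""
    if not samples or not t_candidates or not s_candidates:
        raise ValueError("samples and candidate grids must be non-empty")
    best = (t_candidates[0], s_candidates[0], -1.0)  # replaced on the first iteration
    for t_thr, s_thr in product(t_candidates, s_candidates):
        # Assumed rule: a voxel is dynamic only if both metrics reach their thresholds.
        correct = sum(1 for t, s, truth in samples
                      if (t >= t_thr and s >= s_thr) == truth)
        acc = correct / len(samples)
        if acc > best[2]:
            best = (t_thr, s_thr, acc)
    return best


toy = [
    (30, 12.0, True),
    (18, 6.0, True),
    (0, 0.0, False),
    (25, 0.5, False),
    (4, 3.0, False),
    (8, 2.5, False),
]
best = grid_search(toy, t_candidates=[5, 10, 20], s_candidates=[1, 2, 5])
```

Exhaustive search is practical here only because there are two thresholds and a short candidate list. Accuracy measured on the same labeled voxels used for tuning is optimistic, so thresholds chosen this way would need to be checked on separate recordings.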

The findings of the study highlight the utility of using multiple metrics for accurately estimating important regions. "Our research has the potential to significantly enhance the safety and efficiency of autonomous driving systems. For delivery robots, identifying spots with high collision or heavy congestion risks will enable the planning of efficient delivery routes, reducing times and costs. This can also help address the labor shortages in the logistics industry," Azuma concludes.

Overall, the method could help make smart cities safer and more efficient.

More information: Kenta Azuma et al, Estimation of Spatial Features in 3-D-Sensor Network Using Multiple LiDARs for Indoor Monitoring, IEEE Sensors Journal (2023). DOI: 10.1109/JSEN.2023.3247302

Citation: Enhancing smart cities: New method for accurate 3D sensor network monitoring (2024, August 6) retrieved 6 August 2024 from https://techxplore.com/news/2024-08-smart-cities-method-accurate-3d.html
