Apple is in the best position to make a difference in the public perception of Artificial Intelligence and how it's used at home, on mobile, and everywhere else. And the usual critics who routinely peddle false narratives about the company are already taking poorly aimed potshots at the effort, just as they always have.

So far, 2024 has already seen the splashy launch of Apple Vision Pro and a quieter refresh of Macs and iPads using Apple's latest M3 chips. And in January, Apple CEO Tim Cook made it clear that the company will debut something big with generative AI in 2024, likely at WWDC 2024.

That's not a bad first six months for a company that's ostensibly just "the smoke and mirrors of marketing" without any ability to innovate, at least if you listen to the folks who have been routinely wrong about everything Apple for the last several decades.

Can't innovate? My ads!

For the last decade and a half of iPhone, Apple has indisputably been the driving force in smartphone design. Each year, Apple defines what the next year of Androids will look like, while skipping whatever short-term fad its handset competitors tried the year before.

Apple debuted its capacitive multitouch iPhone in a world full of keypads and slide-out keyboards that tech critics were lathered up about, but those soon vanished from the market because critics were uniformly wrong about what customers wanted.

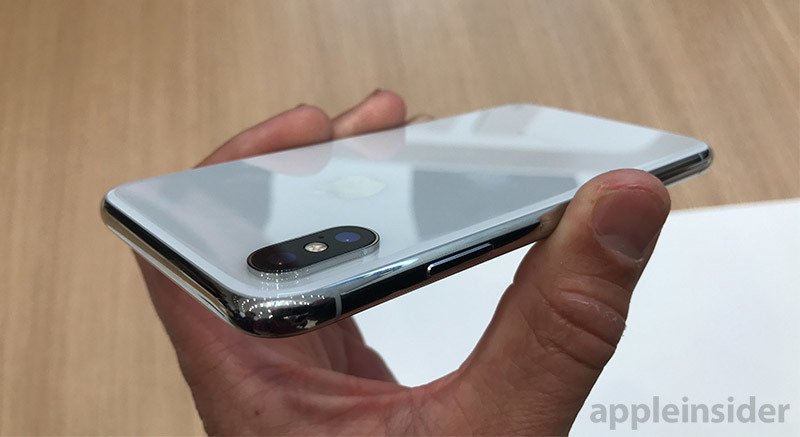

After every Android ended up looking just like an iPhone, Apple launched its slim-bezeled iPhone X with its awful, hideous "notch" that everyone immediately stopped laughing about and got to work duplicating. Bloomberg took the lead in insisting it was obvious nobody would pay an absurd $1,000 for a phone.

That take aged like milk.

Samsung's existing curved-edge screens disappeared as quickly as they'd arrived, and the technorati stopped clamoring about "when Apple could be expected to launch its own curved-edge screen iPhone." Instead, those same folks pretended to be excited about an Android tablet that folded into a super-thick Galaxy phone for the affordable price of just $2k, not counting repairs.

Again, Bloomberg took the lead in cheerleading a false anti-Apple narrative.

Then Google and its minions showed off their tiny "pinhole" cameras that barely took up any notch space at all. At about the same time, Apple launched the Hail Mary of its Dynamic Island on iPhone 14 Pro.

With the Dynamic Island, the notch instead became a prominent, expanding blackness that integrated notifications and app status with the cameras and sensor hardware in a way so clever, so sophisticated, and so far off the well-worn lemming trajectory charted by everyone else that Android's innovators all had to rush back to their drawing boards to perform a quick copy-and-paste operation.

I could rattle off the same story about how the historical trends in smartwatches tacked toward Apple Watch, how TV boxes have largely navigated the seas of smart features using Apple TV as their North Star, how the tablets that weren't iPads all just sank, or how AirPods took the wind out of competitors' sails.

But I already have, and you've probably already read those stories. Nobody who reads AppleInsider regularly needs to be convinced of any of this.

Is it too late to say "that ship has sailed"?

The critical gulf between PCs and EVs

I'm always surprised at how tech bloggers who style themselves as consumer technology aficionados castigate Apple for leading the way while also claiming it's somehow unable to innovate. At the same time, they'll cheerlead for the teams that all wear the same uniforms, because they're really united in playing for advertisers, not the viewers.

Are they fans of the sport or do they just hate the home team because it's Apple? Shouldn't critics remain skeptical even when they're being monetized by the same source of surveillance advertising that drives Apple's competitors?

Other industries aren't like that. Among automakers, for example, car magazine writers don't just pick the one company with the most desirable cars to complain about and give a free pass to all the companies copying their designs and strategies.

Quite the opposite. Back when Tesla shook up the car market with its splashy high-end electrics, complete with gull-wing doors and proprietary Supercharging hardware that actually "just worked," it tethered them to a walled garden of conveniently placed charging stations that enabled exactly the kind of long-distance trips that had been holding EVs back from critical-mass adoption. Car critics were on the edge of their seats applauding.

Car enthusiast content creators were not desperately trying to coin disparaging names for Tesla's signature features, or organizing pitchfork mobs to demand that the EU force Tesla's Superchargers to implement existing, albeit problematic, universal charging plugs and protocols. And they sure weren't telling their readers not to consider a Tesla until it supported de facto industry standards like CarPlay.

There was barely a finger wagged even after Tesla's wildly expensive, misleadingly named Full Self-Driving feature failed to materialize in any form close to what it promised to be, many years after Elon Musk assured consumers that if you bought a Tesla, it would pay for itself by moonlighting as a robot Uber driver.

It turns out that, all these years later, even Tesla's very limited-duty Summon feature can't be relied on to pull the car into a parking space for you, let alone navigate an underground garage or even a mall parking lot.

Apple would have been roasted for delivering a car that claimed to be fully self-driving but really couldn't. Instead, Apple is being roasted for deciding not to deliver a self-driving car that couldn't, even though the work behind that project will still benefit the company for a long time.

Having owned a Tesla for a few years now, I can report that its backup camera is as laggy as a 2010 Android phone, and even its automatic windshield wipers, which are supposed to "just work" when it's raining, perform less impressively than those on cars that had the feature a very long time ago.

Last winter, Tesla drivers were stranded by frozen batteries that couldn't warm themselves up automatically (even though they technically could have, had the software been written to do so), and Tesla's CEO had to come out as a deranged fascist tweeting Blood and Soil-style genocide whataboutism before it ever became unpopular to worship the man as, ostensibly, the Steve Jobs who founded electric cars.

And that's the case even though Musk didn't even create Tesla. He acquired it and insisted the company retroactively name him a founder because he had money. Jobs founded Apple twice: once on the opposite end of IBM's dominance of PCs, and again under the unquestioned PC monopoly of Microsoft.

Unlike Musk, Jobs didn't promise fire and just belch out hot air.

Every move made by Jobs up to the moment of his death was painstakingly questioned, and every product launch was brutally derided as flawed and incomplete. After he died, Apple's successive moves were compared against what Jobs supposedly would have done, perfectly, because suddenly the man was worshiped by the same people who before would just as soon have seen him roasting on a spit, and who now claimed that he had never made a mistake.

Apple was reviled under Jobs' leadership, and then reviled because he was gone. Throughout its history, Apple could never catch a break, whether as the beleaguered underdog or as the industry leader. It was never about Jobs or about market position.

I've never come across a company like Apple, so begrudged by needling critics, grumpy pundits, and industry analysts seeking to belittle its accomplishments, denigrate its lofty self-imposed standards, and dismiss its quietly generous and humanistic corporate culture.

While everyone else copied its Mac desktop, its iPod, and its phones, tablets, and watches, Apple had to strong-arm the rest of the industry into following its lead in universal accessibility, hardware recycling, environmental issues, worker rights, workplace conditions, and customer security and privacy.

Apple is like the Star Trek Federation in a universe of Romulan treachery and Borg monotony. Tech critics and foreign governments are demanding that Apple put down its phasers, surrender unfettered use of its platforms for free, and deliver its most attractive features to Android, because resistance is futile, and why should the good guys win every time consumers vote with their dollars?

Critics don't lead, they follow behind muttering their discontent

Unlike the world of cars or fashion or anything else where bold innovation and striking creativity are applauded, in the world of consumer technology virtually the only thing bloggers and newspaper journalists get really upset about is Thinking Different.

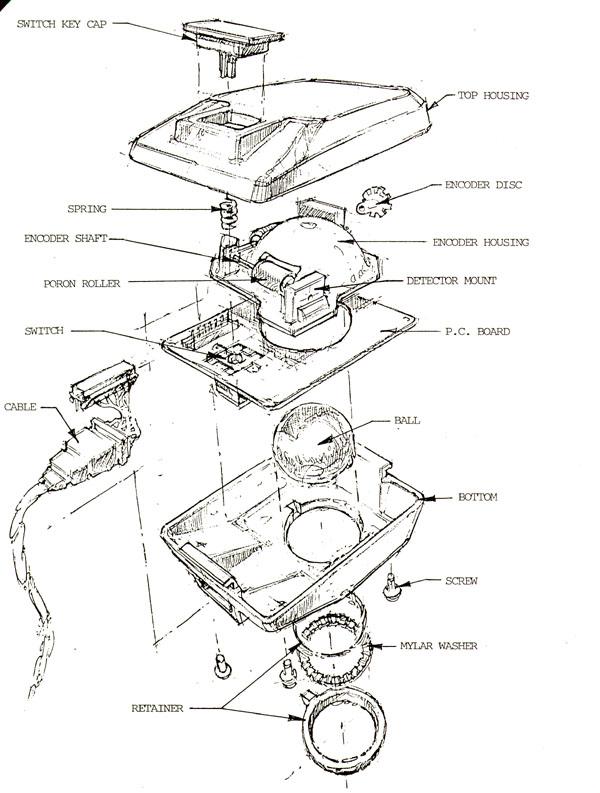

The most notorious critic of the original Macintosh was the prolific and overly argumentative John Dvorak. He greeted the Mac with disdain for its mouse and graphical desktop, writing in the San Francisco Examiner in 1984:

"The nature of the personal computer is simply not fully understood by companies like Apple (or anyone else for that matter). Apple makes the arrogant assumption of thinking that it knows what you want and need. It, unfortunately, leaves the 'why' out of the equation — as in 'why would I want this?' The Macintosh uses an experimental pointing device called a 'mouse'. There is no evidence that people want to use these things."

Apple's mouse and its related developments would subsequently go on to dominate computing for the next two decades. In fact, 23 years later, a mouse would empower Dvorak to publish another opinion, this time advising Apple to "pull the plug on the iPhone," because "there is no likelihood that Apple can be successful in a business this competitive."

A lot of bloggerati were cautiously excited about iPhone. They were also very worried that it lacked Nokia's bulletproof rubber shell and replaceable batteries, compatibility with Java ME and Flash, and the tiny keyboards that Jobs said smartphones shouldn't really require, because it was so much more powerful to have a big bitmapped screen instead.

I spent many years defending Apple's choices for iPhone, back when most people thought I was wrong. But I was even more controversial in seeing the value in iPad, which tech critics were even more united in holding in contempt.

Apple's iPad launch was famously dismissed as "just a big iPod touch," while critics lauded Google's plans to beat iPad, first with Android 3.0 Honeycomb, then Android 5.0 Lollipop, as well as its own Nexus 7, Pixel C tablet, and Chromebook Pixel.

Critics were largely optimistic, cheerleading for Android and Chrome OS to somehow shake up tablets or at least dethrone iPad. Even their acknowledgments of Google's flaws were largely worded hopefully, or with a fading excitement that Android's tablet-optimized apps would eventually appear as expected.

Bloomberg provided disproportionate, infomercial-like coverage of Google's Pixel products without any concern for how well they were selling.

Bloomberg, the Wall Street Journal, and Japan's Nikkei were all so consistently wrong about Apple and its iPad, while being so hopeful about Google's Android and Pixel tablets, that I observed at the time:

"If someone gives you the wrong directions once, you can forgive it as a mistake. But if they chart out a detailed series of turns that are all totally the wrong way, it begins to look like they don't really want you to arrive at your intended destination."

Criticism of the future

When Apple introduced development tools supporting on-device machine learning for organizing your collections of photos and identifying objects in images and text, critics largely yawned. When machine learning was recently rebranded as "artificial intelligence" outside of Apple, they got super excited.

They demanded to know when Apple would enter the AI race. Is Apple hopelessly behind in rushing out a tool to scrape up everyone's existing work and create derivative content from it, or is that more of a Microsoft/Meta/Google thing?

There are a lot of new things Apple could use AI to power. While some tech writers are expressing genuine criticism of AI taking over, the scarier part is who would be doing the "taking over," and whose interests they would serve: customers or advertisers?

Imagine a smart home with AI managing how things happen around your home. Perhaps it turns on lights based on how you've been turning them on yourself, such as when it senses you've entered a room, or based on how you've previously turned on your outside lights at night.

Should this be watched and monitored on remote cloud servers that also host the ads you see, based on what websites you load or which social media posts you see? Or would this be better done on a HomePod or Apple TV you can control?

Would you trust VR eye tracking or environmental room mapping in your home to an ad company that has been collecting your Likes and views and selling that data to its advertisers? Or to a company that explicitly blocks third-party apps from accessing that information unless you expressly opt in?

Apple is in a position to sell AI as a feature for hardware devices, and to perform that intelligence securely and privately on those devices, which you have the power to control.

Would you trust such intimate knowledge of virtually every move you make to be handled remotely by a company that's trying to sell you things, or more accurately, to sell you to its advertiser customers?

Or do you want this to be handled by somebody who's creating your experience especially for you, and just you?

It will be interesting to see how critics spin their outlooks as WWDC approaches and Apple further outlines where it plans to take machine learning and generative AI.