Deep learning techniques have become increasingly advanced over the past few years, reaching human-level accuracy on a wide range of tasks, including image classification and natural language processing.

The widespread use of these computational techniques has fueled research aimed at developing new hardware solutions that can meet their substantial computational demands.

To run deep neural networks, some researchers have been developing so-called hardware accelerators, specialized computing devices that can be programmed to tackle specific computational tasks more efficiently than conventional central processing units (CPUs).

So far, these accelerators have mostly been designed separately from the training and execution of deep learning models, with only a few teams tackling the two research goals in tandem.

Researchers at the University of Manchester and Pragmatic Semiconductor recently set out to develop a machine learning-based method to automatically generate classification circuits from tabular data, that is, structured data that combines numerical and categorical information.

Their proposed method, outlined in a paper published in Nature Electronics, relies on a newly introduced methodology that the team refers to as "tiny classifiers."

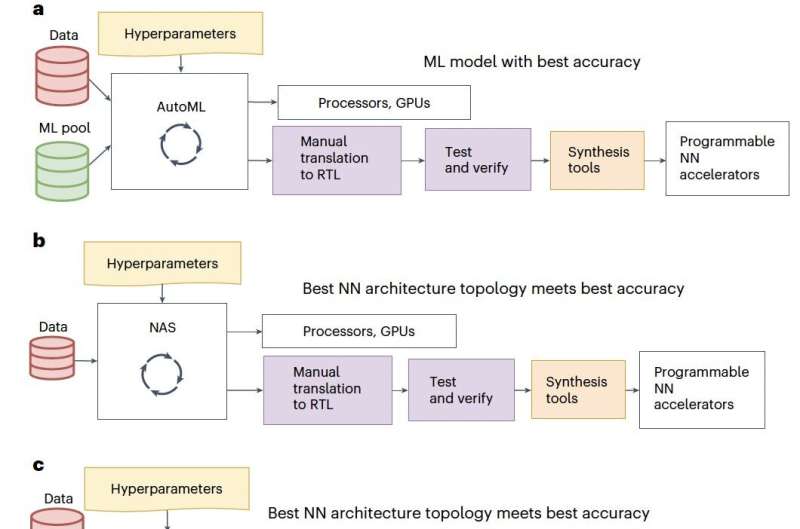

"A typical machine learning development cycle maximizes performance during model training and then minimizes the memory and area footprint of the trained model for deployment on processing cores, graphics processing units, microcontrollers or custom hardware accelerators," Konstantinos Iordanou, Timothy Atkinson and their colleagues wrote in their paper.

"However, this becomes increasingly difficult as machine learning models grow larger and more complex. We report a methodology for automatically generating predictor circuits for the classification of tabular data."

The tiny classifier circuits developed by Iordanou, Atkinson and their colleagues consist of no more than a few hundred logic gates. Despite their small size, they were found to reach accuracies similar to those of state-of-the-art machine learning classifiers.

"The approach offers comparable prediction performance to conventional machine learning techniques as substantially fewer hardware resources and power are used," Iordanou, Atkinson and their colleagues wrote.

"We use an evolutionary algorithm to search over the space of logic gates and automatically generate a classifier circuit with maximized training prediction accuracy, which consists of no more than 300 logic gates."
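The article does not describe the details of the team's algorithm, so the following is only a minimal sketch of the general idea: a generic (1+λ) evolutionary search over small feed-forward circuits of two-input logic gates, scored by training accuracy. The gate set, the circuit encoding, the mutation operator, the toy dataset and all function names are assumptions made for illustration, and the inputs are assumed to be already encoded as bits.

```python
import itertools
import random

# Hypothetical gate set: two-input functions on 0/1 integers.
GATES = {
    "AND": lambda a, b: a & b,
    "OR": lambda a, b: a | b,
    "XOR": lambda a, b: a ^ b,
    "NAND": lambda a, b: 1 - (a & b),
}
GATE_NAMES = sorted(GATES)


def random_gate(position, n_inputs, rng):
    """Pick a gate wired to primary inputs or to earlier gates only."""
    limit = n_inputs + position
    return (rng.choice(GATE_NAMES), rng.randrange(limit), rng.randrange(limit))


def random_circuit(n_inputs, n_gates, rng):
    return [random_gate(i, n_inputs, rng) for i in range(n_gates)]


def evaluate(circuit, bits):
    """Run the circuit; the last gate's output is the predicted class."""
    values = list(bits)
    for name, a, b in circuit:
        values.append(GATES[name](values[a], values[b]))
    return values[-1]


def accuracy(circuit, rows, labels):
    hits = sum(evaluate(circuit, r) == y for r, y in zip(rows, labels))
    return hits / len(rows)


def mutate(circuit, n_inputs, rng):
    """Replace one randomly chosen gate with a fresh random gate."""
    child = list(circuit)
    i = rng.randrange(len(child))
    child[i] = random_gate(i, n_inputs, rng)
    return child


def evolve(rows, labels, n_gates=8, generations=300, offspring=4, seed=0):
    """(1+lambda) search: keep the parent unless a child is at least as good."""
    rng = random.Random(seed)
    n_inputs = len(rows[0])
    parent = random_circuit(n_inputs, n_gates, rng)
    best = accuracy(parent, rows, labels)
    for _ in range(generations):
        children = [mutate(parent, n_inputs, rng) for _ in range(offspring)]
        scored = [(accuracy(c, rows, labels), c) for c in children]
        top_score = max(s for s, _ in scored)
        if top_score >= best:  # ties are accepted to allow neutral drift
            parent = next(c for s, c in scored if s == top_score)
            best = top_score
    return parent, best


# Toy "tabular" dataset (an assumption): three binary features,
# label = (x0 AND x1) OR x2.
rows = [list(bits) for bits in itertools.product([0, 1], repeat=3)]
labels = [(r[0] & r[1]) | r[2] for r in rows]

circuit, score = evolve(rows, labels)
print(f"training accuracy: {score:.2f} with {len(circuit)} gates")
```

The gate budget is fixed up front (`n_gates`), which mirrors the idea of capping circuit size, although the cap reported in the paper is 300 gates and the real search space is far larger than this toy example.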

The researchers tested their tiny classifier circuits in a series of simulations and found promising results in terms of both accuracy and power consumption. They then validated the circuits' performance on a real, low-cost integrated circuit (IC).

"When simulated as a silicon chip, our tiny classifiers use 8–18 times less area and 4–8 times less power than the best-performing machine learning baseline," Iordanou, Atkinson and their colleagues wrote.

"When implemented as a low-cost chip on a flexible substrate, they occupy 10–75 times less area, consume 13–75 times less power and have 6 times better yield than the most hardware-efficient ML baseline."

In the future, the tiny classifiers developed by the researchers could be used to efficiently tackle a wide range of real-world tasks. For instance, they could serve as triggering circuits on a chip, for the smart packaging and monitoring of various goods, and for the development of low-cost near-sensor computing systems.

More information: Konstantinos Iordanou et al, Low-cost and efficient prediction hardware for tabular data using tiny classifier circuits, Nature Electronics (2024). DOI: 10.1038/s41928-024-01157-5


Citation: A method to generate predictor circuits for the classification of tabular data (2024, May 23) retrieved 3 June 2024 from https://techxplore.com/news/2024-05-method-generate-predictor-circuits-classification.html
