Optimization algorithms are pivotal in machine learning and in artificial intelligence (AI) more broadly. For a long time, it has been widely believed that designing and configuring optimization algorithms relies heavily on human expertise and requires customized work for each specific problem.

However, with growing demand for AI and the emergence of new, complex problems, this manual design paradigm faces significant challenges. If machines could design optimization algorithms automatically or semi-automatically, it would not only greatly ease these challenges but also substantially expand the horizons of AI.

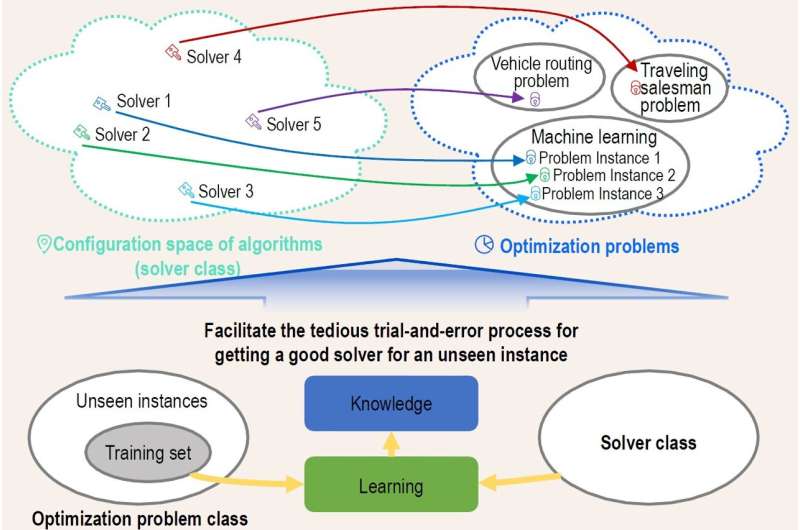

In recent years, researchers have been exploring ways to automate algorithm configuration and design by learning from a set of training problem instances. These efforts, referred to as Learn to Optimize (L2O), take a large number of optimization problem instances as input and attempt to train, within a configuration space (or even a code space), optimization algorithms that generalize to new instances.
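To make the idea concrete, here is a minimal Python sketch of the basic L2O loop. Everything in it is an illustrative assumption rather than a method from the review: the "problem class" is a hypothetical family of random convex quadratics, the solver is gradient descent with heavy-ball momentum, its configuration space is just (step size, momentum), and the learning procedure is plain random search. The configuration that does best on the training instances is then checked on unseen instances from the same class, which is the generalization question L2O is concerned with.

```python
import random

def make_quadratic(rng, dim=5):
    # Hypothetical problem class: f(x) = 0.5 * sum(a_i * x_i^2), curvatures a_i drawn from [0.5, 10]
    return [rng.uniform(0.5, 10.0) for _ in range(dim)]

def run_solver(curv, step, momentum, iters=50):
    # Parameterized solver: gradient descent with heavy-ball momentum, started at x = (1, ..., 1)
    x = [1.0] * len(curv)
    v = [0.0] * len(curv)
    for _ in range(iters):
        for i, a in enumerate(curv):
            grad = a * x[i]
            v[i] = momentum * v[i] - step * grad
            x[i] += v[i]
    # Final objective value (lower is better)
    return 0.5 * sum(a * xi * xi for a, xi in zip(curv, x))

def average_cost(config, instances):
    step, momentum = config
    return sum(run_solver(c, step, momentum) for c in instances) / len(instances)

def learn_configuration(train, n_candidates=200, seed=0):
    # Random search over (step size, momentum); keeps the configuration
    # with the lowest average cost on the training instances.
    rng = random.Random(seed)
    best, best_cost = None, float("inf")
    for _ in range(n_candidates):
        config = (rng.uniform(0.001, 0.2), rng.uniform(0.0, 0.95))
        cost = average_cost(config, train)
        if cost < best_cost:
            best, best_cost = config, cost
    return best, best_cost

rng = random.Random(42)
train = [make_quadratic(rng) for _ in range(20)]
test = [make_quadratic(rng) for _ in range(20)]  # unseen instances from the same class
config, train_cost = learn_configuration(train)
baseline = (0.01, 0.0)  # hypothetical hand-picked default: small step, no momentum
print("learned config:", config)
print("train cost:", train_cost)
print("test cost (learned):", average_cost(config, test))
print("test cost (baseline):", average_cost(baseline, test))
```

Random search is only the simplest choice here; a real L2O system could search with Bayesian optimization, evolutionary methods, or gradient-based meta-learning, and over a far richer configuration or code space.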

Results in fields such as Boolean satisfiability (SAT), machine learning, computer vision, and adversarial example generation have shown that automatically or semi-automatically designed optimization algorithms can perform comparably to, or even outperform, manually designed ones. This suggests that optimization algorithm design may have entered the dawn of "machine replacing human."

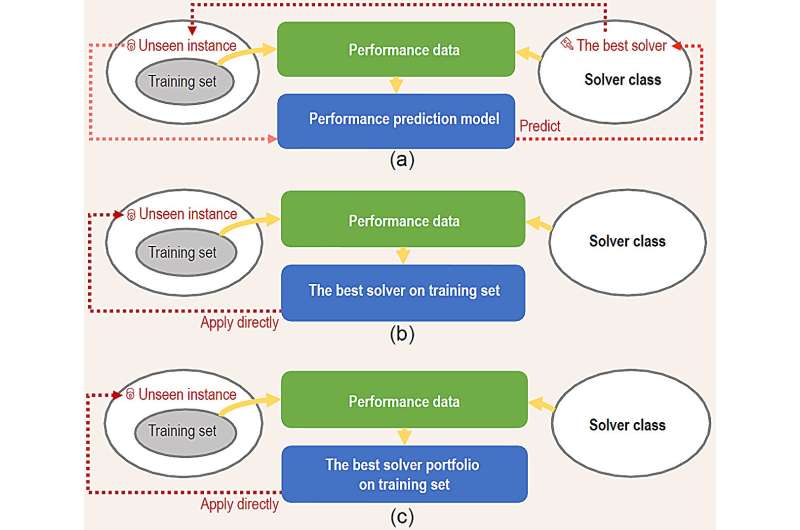

The review outlines three main approaches to L2O: training performance prediction models, training a single solver, and training a portfolio of solvers. It also discusses theoretical guarantees for the training process, successful applications, and the generalization issues of L2O, and closes by pointing to promising directions for future research.
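The portfolio approach can be illustrated with greedy construction, one common strategy in the algorithm-portfolio literature, though not necessarily the one the review favors. This sketch assumes a precomputed cost matrix of candidate solvers on training instances (the numbers are made up for illustration) and scores a portfolio by running every member on each instance and keeping the best result. The helpers `portfolio_cost` and `greedy_portfolio` are hypothetical names, not from the review.

```python
def portfolio_cost(portfolio, costs):
    # costs[c][j]: cost of candidate solver c on training instance j (lower is better).
    # A portfolio runs every member and keeps the best result on each instance.
    n = len(next(iter(costs.values())))
    return sum(min(costs[c][j] for c in portfolio) for j in range(n)) / n

def greedy_portfolio(costs, k):
    # Repeatedly add the candidate that most lowers the portfolio's average cost.
    portfolio = []
    for _ in range(k):
        remaining = [c for c in costs if c not in portfolio]
        if not remaining:
            break
        best = min(remaining, key=lambda c: portfolio_cost(portfolio + [c], costs))
        portfolio.append(best)
    return portfolio

# Toy cost matrix (illustrative numbers only): 3 candidate solvers on 5 training instances
costs = {
    "A": [1.0, 1.0, 9.0, 9.0, 2.0],
    "B": [9.0, 9.0, 1.0, 1.0, 3.0],
    "C": [5.0, 5.0, 5.0, 5.0, 5.0],
}

chosen = greedy_portfolio(costs, 2)
print(chosen)                          # → ['A', 'B']
print(portfolio_cost(chosen, costs))   # → 1.2
print(portfolio_cost(["A"], costs))    # → 4.4
```

Solver B is worse than A on its own, yet adding it cuts the average cost from 4.4 to 1.2 because the two excel on different instances. That complementarity is what training a portfolio aims to exploit.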

The review is published in the journal National Science Review.

"L2O is expected to grow into a critical technology that relieves increasingly unaffordable human labor in AI," says Ke Tang, first author of the review. However, he also points out that ensuring reasonable generalization remains a challenge for L2O, especially for complex problem classes and solver classes.

"A second-stage fine-tuning might be necessary in many real-world scenarios," Tang suggests. "The learned solver(s) could be viewed as foundation models for further fine-tuning."

He believes that building a synergy between the training and fine-tuning of such foundation models will be a critical direction for realizing the full potential of L2O.

More information: Ke Tang et al, Learn to optimize—a brief overview, National Science Review (2024). DOI: 10.1093/nsr/nwae132

Citation: The future of optimization: How 'Learn to Optimize' is reshaping algorithm design and configuration (2024, May 15) retrieved 15 May 2024 from https://techxplore.com/news/2024-05-future-optimization-optimize-reshaping-algorithm.html
