The human brain, with its remarkable general intelligence and exceptional efficiency in power consumption, serves as a constant inspiration and aspiration for the field of artificial intelligence. Drawing insights from the brain's fundamental structure and information processing mechanisms, brain-inspired computing has emerged as a new computational paradigm, poised to steer artificial intelligence from specialized domains towards broader applications in general intelligence.

The International Semiconductor Association has recognized brain-inspired computing as one of the two most promising disruptive computing technologies in the post-Moore's Law era.

As an interdisciplinary field spanning chips, software, algorithms, and models, brain-inspired computing continues to expand and deepen in both concept and research paradigm. Notably, the Tianjic chip, released by Tsinghua University's Center for Brain-inspired Computing Research in 2019, marked a significant milestone for the field.

This chip not only supports computer science-oriented and neuroscience-oriented models but also enables their hybrid modeling, providing strong support for the emerging paradigm of dual-brain-driven heterogeneous brain-inspired computing. This breakthrough has further stimulated the vigorous development and innovation of Hybrid Neural Networks (HNNs).

Recently, a comprehensive review of HNNs was published in the National Science Review, thanks to a collaboration between Professor Rong Zhao's team and Professor Luping Shi's team from Tsinghua University. The review provides a systematic overview of HNNs, covering their origins, concepts, construction frameworks, and supporting systems while also highlighting their development trajectory and future directions.

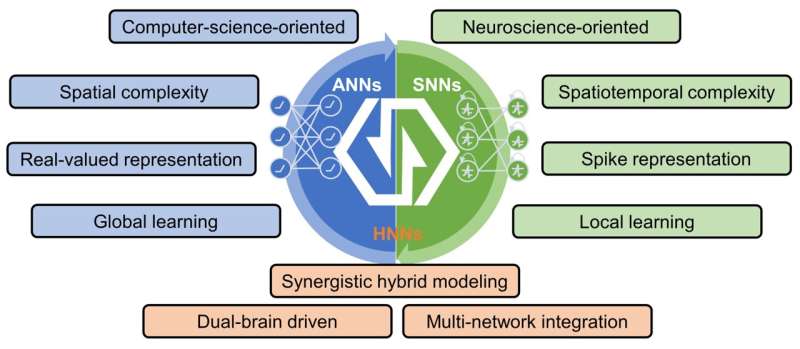

As a representative research paradigm driven by dual-brain principles, HNNs combine the neuroscience-oriented Spiking Neural Networks (SNNs) with the computer science-oriented Artificial Neural Networks (ANNs). By harnessing the distinct advantages of these heterogeneous networks in information representation and processing, HNNs inject new vitality into the development of Artificial General Intelligence (AGI).
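To make the contrast in information representation concrete, here is a minimal sketch of the two neuron styles. It is an illustration only, not code from the review: the function names, the leaky integrate-and-fire update rule, and all parameter values are assumptions chosen for simplicity.

```python
from typing import List


def relu_neuron(x: float, weight: float = 1.0, bias: float = 0.0) -> float:
    """ANN-style neuron (illustrative): one input, one continuous output."""
    return max(0.0, weight * x + bias)


def lif_spikes(inputs: List[float], leak: float = 0.5,
               threshold: float = 1.0) -> List[int]:
    """SNN-style neuron (illustrative leaky integrate-and-fire).

    The membrane potential decays by `leak` each step, accumulates the
    input, and emits a binary spike (then resets) when it reaches
    `threshold`. All parameters here are assumed values.
    """
    potential = 0.0
    spikes = []
    for current in inputs:
        potential = leak * potential + current
        if potential >= threshold:
            spikes.append(1)
            potential = 0.0  # hard reset after firing
        else:
            spikes.append(0)
    return spikes


if __name__ == "__main__":
    print(relu_neuron(0.75))        # a single real number
    print(lif_spikes([0.75] * 6))   # a binary spike train over time
```

The ANN-style neuron answers with one real value per input, while the SNN-style neuron spreads its answer over time as a sequence of binary events. That mismatch is what a hybrid network has to bridge.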

The heterogeneity inherent in ANNs and SNNs within HNNs provides them with extensive flexibility and diversity. However, this heterogeneity also poses significant challenges in their construction. To effectively promote the development of HNNs, a systematic approach covering various perspectives is imperative, including integration paradigms, fundamental theories, information flow, interaction modes, and network structures.

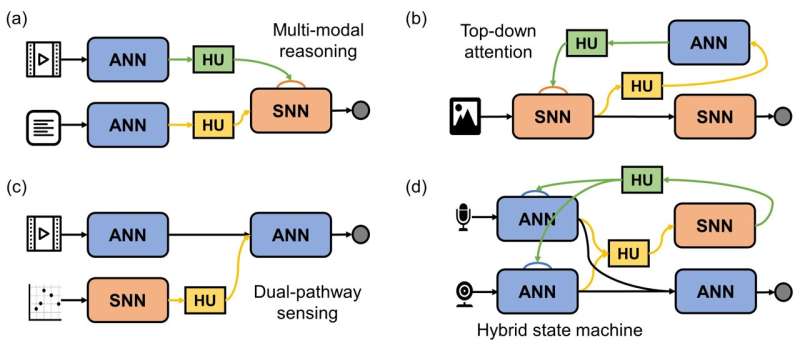

The collaborative team has pioneered a general design and computation framework for HNNs that addresses these challenges. The framework follows a decouple-then-integrate approach: decoupling improves flexibility and construction efficiency, while integration through parameterized Hybrid Units (HUs) overcomes the connectivity problem between heterogeneous neural networks and combines the distinctive characteristics of the different computing paradigms.
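The role of a hybrid unit can be sketched as a small parameterized converter sitting at the boundary between the two network types. The sketch below is a simplified assumption, not the team's implementation: the class names, the windowed rate decoding, the integrate-and-fire encoding, and the `window`, `scale`, `steps`, and `threshold` parameters are all hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SpikeToValueUnit:
    """Hypothetical SNN -> ANN unit: windowed, scaled firing rate."""
    window: int          # number of most recent time steps to integrate
    scale: float = 1.0   # tunable gain, a stand-in for a learnable parameter

    def __call__(self, spikes: List[int]) -> float:
        recent = spikes[-self.window:]
        return self.scale * sum(recent) / self.window


@dataclass
class ValueToSpikeUnit:
    """Hypothetical ANN -> SNN unit: integrate-and-fire encoder."""
    steps: int              # length of the emitted spike train
    threshold: float = 1.0  # firing threshold of the accumulator

    def __call__(self, value: float) -> List[int]:
        potential = 0.0
        train = []
        for _ in range(self.steps):
            potential += value
            if potential >= self.threshold:
                train.append(1)
                potential -= self.threshold  # keep the remainder
            else:
                train.append(0)
        return train


if __name__ == "__main__":
    to_spikes = ValueToSpikeUnit(steps=8)
    to_value = SpikeToValueUnit(window=8)
    train = to_spikes(0.25)         # continuous activation -> spike train
    print(train, to_value(train))   # spike train -> continuous activation
```

Because each converter is an independent component with its own parameters, the ANN and SNN on either side can be designed separately and then joined, which is the intuition behind decoupling followed by integration.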

By adopting this novel framework, HNNs can benefit from the strengths of both ANNs and SNNs while mitigating their limitations. This approach not only enhances the performance and capabilities of HNNs but also provides a foundation for further research and advancements in the field of brain-inspired computing.

By considering diverse design dimensions, flexible and diverse HNN models can be constructed. These models can leverage the heterogeneity of data and supporting systems to achieve a better balance between performance and cost.

Currently, HNNs have been widely applied to intelligent tasks such as target tracking, speech recognition, continual learning, and decision control, providing innovative solutions in these domains. Furthermore, inspired by the heterogeneity of the brain, HNNs can also serve as a modeling tool for neuroscience research, facilitating the joint development of neuroscience and HNNs.

This synergy opens up ample room for both fields to advance together. Through continued research and exploration, HNNs are expected to contribute further to the development of AGI and to our understanding of the brain's complex mechanisms.

To efficiently deploy and apply HNNs, the development of suitable supporting systems is crucial. Currently, several supporting infrastructures have been developed, including chips, software, and systems. In terms of chip design, Tianjic, a hybrid brain-inspired chip, has been comprehensively optimized for seamless integration of HNNs. This chip provides enhanced performance and efficiency specifically tailored for HNN applications.

On the software side, neuromorphic completeness provides theoretical support and framework design guidance for the compilation and deployment of HNNs. This ensures that the software systems can effectively handle the unique characteristics and computational requirements of HNN models.

Furthermore, the Jingwei-2 brain-inspired computing system has optimized the computing, storage, and communication infrastructure at the cluster level. This system lays a solid foundation for the development of large-scale HNNs by providing the necessary resources to handle the computational demands of complex neural networks.

Looking ahead, in-depth research on large-scale HNNs holds great significance. In deep learning, for instance, Transformer-based models with billions or even trillions of parameters have made significant progress in natural language processing and image understanding. However, these advances come at a significant energy cost.

At the recent ISSCC, a conference on chip design, it was reported that hybrid Transformer models constructed using HNNs achieved a substantial reduction in energy consumption. This highlights the potential of HNNs to address the energy-efficiency challenges of large-scale models.

Exploring the design and optimization methods of large-scale HNNs, as well as constructing training datasets, will be important research directions in the future. These areas offer high research value and broad application prospects, particularly in mitigating energy consumption while maintaining or even improving the performance of complex models.

More information: Faqiang Liu et al, Advancing brain-inspired computing with hybrid neural networks, National Science Review (2024). DOI: 10.1093/nsr/nwae066

