A team of robotics engineers at Robotic Systems Lab, in Switzerland, has developed a hybrid control architecture that combines the advantages of current quadruped robot control systems to give four-legged robots better walking capabilities on rough terrain.

For their project, reported in the journal Science Robotics, the group combined parts of two currently used technologies to improve quadruped agility.

As the research team notes, there are two main methods currently used by robot makers to allow four-legged robots to walk around on rough terrain. The first is called trajectory optimization with inverse dynamics; the second uses simulation-based reinforcement learning.

The first approach is model-based, and while it offers a host of advantages, such as accurate planning abilities, it also suffers from mismatches between the model and real-world conditions.

The second approach is robust, especially regarding recovery skills, but it struggles with the sparse rewards that arise in extra-challenging environments, such as terrain with few "safe" footholds.
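To make the foothold problem concrete, here is a toy sketch in Python. It is not taken from the study: the function name `sparse_reward_hit_rate` and the idea of modeling terrain as a single "safe fraction" are assumptions made purely for illustration. It estimates how often a randomly placed foot would land on a safe spot, and therefore how often a learning algorithm exploring at random would see any reward at all.

```python
import random


def sparse_reward_hit_rate(safe_fraction, steps=10_000, seed=0):
    """Estimate how often a random foot placement lands on a safe foothold.

    safe_fraction is a hypothetical stand-in for how much of the terrain
    is safe to step on (0.0 = nothing, 1.0 = everything).
    """
    rng = random.Random(seed)  # fixed seed so the estimate is repeatable
    hits = sum(1 for _ in range(steps) if rng.random() < safe_fraction)
    return hits / steps


# Compare open ground with terrain resembling stepping stones.
easy = sparse_reward_hit_rate(0.80)
hard = sparse_reward_hit_rate(0.05)
print(f"easy terrain: {easy:.3f}, hard terrain: {hard:.3f}")
```

When only a small share of the ground is safe, almost every exploratory step earns nothing, which is one informal way to picture why a learning-only controller can have trouble in such environments.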

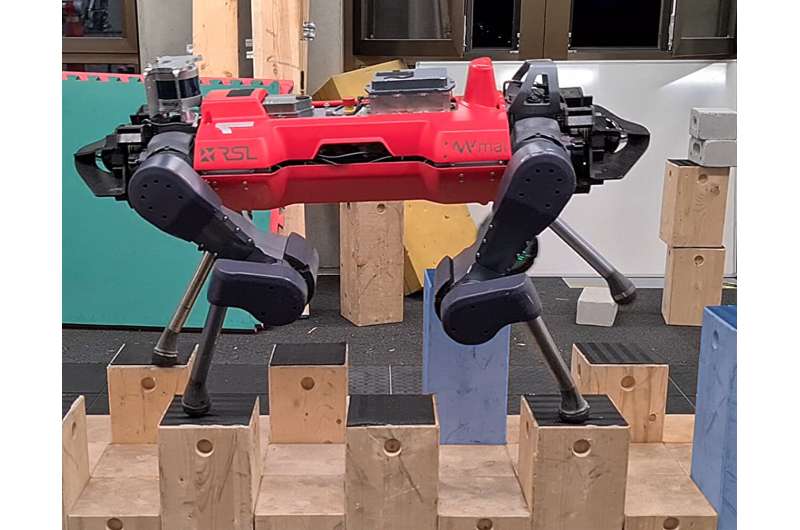

For this new study, the research team attempted to overcome some of the problems encountered with the other approaches while keeping the features that work well. The result is a pipeline (control framework) that the team calls Deep Tracking Control (DTC), which they implemented on a robot called ANYmal.
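One way to picture how a planner and a learned controller might be combined is a "tracking" reward: the planner proposes a foothold, and the learned policy is rewarded according to how closely the foot lands on it. The Python sketch below is an illustration under stated assumptions, not the formula used in the study. The function name `foothold_tracking_reward`, the Gaussian shape and the `sigma` tolerance are all hypothetical choices.

```python
import math


def foothold_tracking_reward(foot_xy, target_xy, sigma=0.1):
    """Return a reward in (0, 1] that grows as the foot nears the target.

    foot_xy   -- (x, y) where the foot actually landed, in meters
    target_xy -- (x, y) foothold proposed by a planner, in meters
    sigma     -- hypothetical tolerance in meters; smaller is stricter
    """
    dx = foot_xy[0] - target_xy[0]
    dy = foot_xy[1] - target_xy[1]
    dist_sq = dx * dx + dy * dy
    # Gaussian-shaped reward: 1.0 on target, decaying smoothly with distance.
    return math.exp(-dist_sq / (2.0 * sigma ** 2))


# A foot that lands 5 cm from the planned foothold still earns partial credit.
print(foothold_tracking_reward((0.05, 0.0), (0.0, 0.0)))
```

Because a reward of this shape is nonzero everywhere, the policy gets a learning signal on every step rather than only when it happens to hit a safe foothold.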

The researchers have been working on their ideas for several years with a variety of partners. In 2019, for example, they worked with Intelligent Systems Lab to find a way to use machine learning techniques to make a canine-like robot more agile and faster. And two years ago, they were in the process of teaching their robot to hike.

Developing the DTC was a four-step process: identifying parameters and estimating uncertainties, training the actuator net to model software dynamics, training a control policy using the models created, and deploying onto a physical system. As part of the physical implementation, the DTC was trained on data from 4,000 virtual robot simulations covering a wide variety of terrain elements over an area representing 76,000 square meters.

Testing of ANYmal showed that combining trajectory optimization with reinforcement learning allowed it to better position its legs in variable terrain conditions, which in turn allowed it to find the best possible footholds among those that were available. It also allowed for better fall recovery. Together these capabilities allowed the robot to traverse difficult landscapes with fewer failures than other robots.

More information: Fabian Jenelten et al, DTC: Deep Tracking Control, Science Robotics (2024). DOI: 10.1126/scirobotics.adh5401

© 2024 Science X Network

Citation: A hybrid control architecture that combines advantages of current quadruped robot controls (2024, January 18) retrieved 18 January 2024 from https://techxplore.com/news/2024-01-hybrid-architecture-combines-advantages-current.html
