Advertising content generated by artificial intelligence (AI) is perceived as being of higher quality than content produced by human experts, according to a new research paper in Judgment and Decision Making.

The findings, from the first study of its kind, challenge the view that knowing AI was involved in generating a piece of content lowers its perceived quality, a phenomenon known as algorithm aversion. ChatGPT-4 outperformed human experts in generating advertising content for products and persuasive content for campaigns.

The researchers, from the Massachusetts Institute of Technology and the University of California, Berkeley, enlisted professional content creators and ChatGPT-4 to create advertising content for products and persuasive content for campaigns.

The advertising content covered retail products such as air fryers, projectors, and electric bikes, while the persuasive content supported social campaigns such as starting to recycle and eating less junk food.

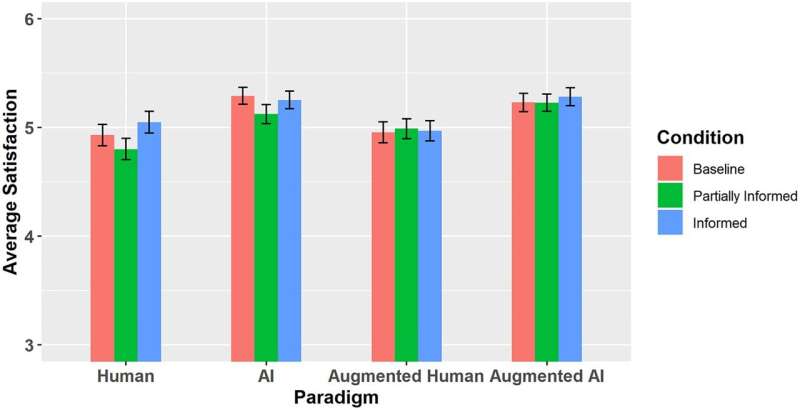

The content was created under four models of human-AI collaboration: human only; AI only (ChatGPT-4); augmented human, where a human makes the final decision with AI output as a reference; and augmented AI, where the AI makes the final decision with human output as a reference.

The data were analyzed across three levels of evaluator knowledge: completely ignorant of the context, uninformed (partial knowledge, with no knowledge of which context is which), and informed (full knowledge of the context).

Content generated when AI made the sole or final decision on the output resulted in higher satisfaction levels compared to content generated when a human expert made the sole or final decision on the output.

The research also found that revealing the source of content production reduces, but does not reverse, the perceived quality gap between human- and AI-generated content.

In analyzing their findings, the researchers concluded that bias in evaluation is driven predominantly by human favoritism rather than AI aversion. Knowing that a piece of content was created by a human expert increases its reported perceived quality, but knowing that AI was involved in the creation process does not affect its perceived quality.

Nevertheless, the willingness to pay for content generated when AI made the sole or final decision on the output was still slightly higher than that for content generated by human experts or augmented human experts.

Human oversight

Author Yunhao Zhang, of the Massachusetts Institute of Technology and the University of California, Berkeley, noted the importance of evaluating the paper's findings in context.

"Although our research indicates that content produced by AI can be compelling and persuasive, we are not suggesting that AI should completely displace human workers or human oversight.

"In our research's contexts, we carefully selected harmless products and campaigns. However, human oversight is still needed to ensure the content produced by AI is appropriate in more sensitive contexts, and that inappropriate or dangerous content is never distributed."

Co-author Renée Gosline, of the Massachusetts Institute of Technology, commented that although large language models (LLMs) can generate quality content at scale, this may not be true for all purposes and humans still have an important role to play. Ideally, humans and AI would be complementary in the creation of high-quality content.

"Our results show that AI can be beneficial by scaling high-quality content. In the context of our study, it took ChatGPT-4 a matter of seconds to produce content on par with or of higher quality than that of the human experts. But it is also clear that the market values human input.

"To our knowledge, our research is the first to compare people's perceptions of persuasive content generated by industry professionals, LLMs, and their collaboration, as well as measure people's biases toward content generated by human experts. Research like this can help better illuminate the ways people think about AI, which is critical for understanding its adoption, bias proliferation, and how we can design human-first AI tools."

More information: Yunhao Zhang et al, Human favoritism, not AI aversion: People's perceptions (and bias) toward generative AI, human experts, and human–GAI collaboration in persuasive content generation, Judgment and Decision Making (2023). DOI: 10.1017/jdm.2023.37

Citation: Algorithm appreciation overcomes algorithm aversion, advertising content study finds (2023, November 28) retrieved 28 November 2023 from https://techxplore.com/news/2023-11-algorithm-aversion-advertising-content.html
