A trio of computer scientists at Auburn University, in the U.S., working with a colleague from the University of Alberta, in Canada, has found that claims about the visual skills of large language models (LLMs) with vision capabilities, known as vision language models (VLMs), may overstate what these models can actually do.

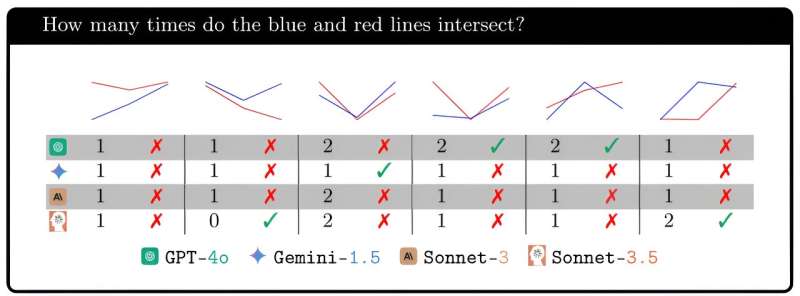

Pooyan Rahmanzadehgervi, Logan Bolton, Anh Totti Nguyen and Mohammad Reza Taesiri tested the visual abilities of four of the most popular VLMs: GPT-4o, Gemini-1.5 Pro, Claude-3 Sonnet, and Claude-3.5 Sonnet. Their research is posted to the arXiv preprint server.

As large language models have evolved over the past year, developers have added new features such as the ability to accept visual input. But these abilities have raised questions about the nature of visual ability itself.

As with animals, any human-built visual system needs two main components: a camera, and a brain to process what the camera captures. In this new study, the researchers found that while the camera used to capture images may be highly developed, the processing of the data it produces is still in its early stages.

It is one thing to ask a language model to identify a building such as the Taj Mahal, and quite another to ask it questions about the things that appear in the image. For example, asking a model how many of the children standing in front of the Taj Mahal are holding hands is tricky, because the model has not been taught to count; it has been taught to recognize things like hand-holding.

Thus, unless it has been shown images with the same number of children holding hands as the picture in question, it will have no way of giving a correct answer.

The researchers demonstrated this lack of processing ability by asking the four VLMs to do things that are very simple for people, such as counting how many circles in a picture overlap or how many rings are interlocked.

Unsurprisingly, all four models performed poorly; they did well only on images resembling those they had been trained on. For example, they had difficulty counting interlocking rings when there were more than five, because apart from the Olympic rings, they had not seen such examples.

The team's work shows that vision language models have a long way to go before they can process visual information on par with humans.

More information: Pooyan Rahmanzadehgervi et al, Vision language models are blind, arXiv (2024). DOI: 10.48550/arxiv.2407.06581

Vision language models are blind: vlmsareblind.github.io/

Journal information: arXiv

© 2024 Science X Network

Citation: Visual abilities of language models found to be lacking depth (2024, July 12) retrieved 12 July 2024 from https://techxplore.com/news/2024-07-visual-abilities-language-lacking-depth.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.