A new way to teach artificial intelligence (AI) to understand human line drawings, including those made by non-artists, has been developed by a team from the University of Surrey and Stanford University.

The new model approaches human levels of performance in recognizing scene sketches.

Dr. Yulia Gryaditskaya, Lecturer at Surrey's Center for Vision, Speech and Signal Processing (CVSSP) and Surrey Institute for People-Centered AI (PAI), said, "Sketching is a powerful language of visual communication. It is sometimes even more expressive and flexible than spoken language.

"Developing tools for understanding sketches is a step towards more powerful human-computer interaction and more efficient design workflows. Examples include being able to search for or create images by sketching something."

People of all ages and backgrounds use drawings to explore new ideas and communicate. Yet, AI systems have historically struggled to understand sketches.

AI has to be taught how to understand images. Usually, this involves a labor-intensive process of collecting labels for every pixel in the image. The AI then learns from these labels.

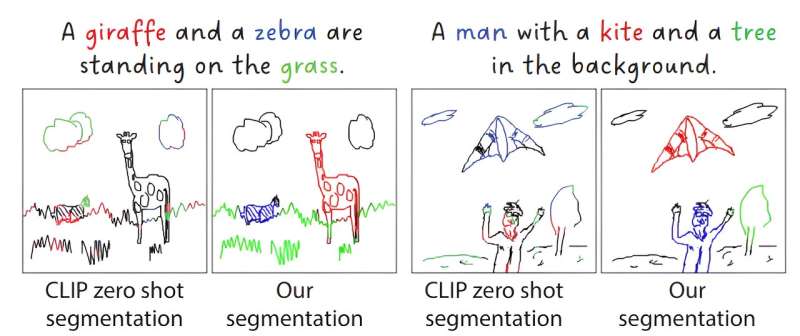

Instead, the team taught the AI using a combination of sketches and written descriptions. It learned to group pixels, matching them against one of the categories in a description.
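The idea of matching pixels against the categories in a description can be illustrated with a small sketch. This is not the team's model: the function name `assign_pixels_to_categories`, the toy feature vectors, and the use of cosine similarity are all assumptions made for illustration. It only shows the general pattern of giving each pixel the label of the closest category.

```python
import numpy as np

def assign_pixels_to_categories(pixel_features, category_embeddings):
    """Label each pixel with the index of its most similar category.

    pixel_features: (H, W, D) array, one feature vector per pixel (hypothetical).
    category_embeddings: (C, D) array, one vector per category word (hypothetical).
    """
    # Normalize both sides so the dot product equals cosine similarity.
    pix = pixel_features / np.linalg.norm(pixel_features, axis=-1, keepdims=True)
    cat = category_embeddings / np.linalg.norm(category_embeddings, axis=-1, keepdims=True)
    similarity = pix @ cat.T           # shape (H, W, C)
    return similarity.argmax(axis=-1)  # shape (H, W)

# Toy data: three categories taken from a written description (assumed values).
categories = ["kite", "tree", "giraffe"]
category_embeddings = np.array([
    [1.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
])

# A 2x2 "sketch" with one made-up feature vector per pixel.
pixel_features = np.array([
    [[0.9, 0.1, 0.0], [0.2, 0.8, 0.1]],
    [[0.1, 0.2, 0.7], [0.0, 1.0, 0.0]],
])

label_map = assign_pixels_to_categories(pixel_features, category_embeddings)
labels = [[categories[i] for i in row] for row in label_map]
print(labels)
```

In a real system the pixel features and category vectors would come from trained image and text encoders; here they are hand-written so the grouping step is easy to follow.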

The resulting AI displayed a much richer and more human-like understanding of these drawings than previous approaches. It correctly identified and labeled kites, trees, giraffes and other objects with 85% accuracy. This outperformed other models that relied on labeled pixels.

As well as identifying objects in a complex scene, it could identify which pen strokes were intended to depict each object. The new method works well with informal sketches drawn by non-artists, as well as drawings of objects it was not explicitly trained on.
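One simple way to picture going from labeled pixels to labeled pen strokes is a majority vote over the pixels each stroke covers. The sketch below is an assumption for illustration only, not the method described in the paper; the function `label_strokes`, the stroke names, and the toy data are all hypothetical.

```python
from collections import Counter

def label_strokes(strokes, pixel_labels):
    """Give each stroke the most common label among the pixels it covers.

    strokes: dict mapping a stroke id to a list of (row, col) pixels (hypothetical).
    pixel_labels: 2D list of category names, one per pixel (hypothetical).
    """
    result = {}
    for stroke_id, pixels in strokes.items():
        votes = Counter(pixel_labels[r][c] for r, c in pixels)
        result[stroke_id] = votes.most_common(1)[0][0]
    return result

# Toy per-pixel labels for a 2x3 sketch.
pixel_labels = [
    ["kite", "kite", "tree"],
    ["kite", "tree", "tree"],
]

# Two made-up strokes; the second one touches a single "kite" pixel.
strokes = {
    "stroke_0": [(0, 0), (0, 1), (1, 0)],
    "stroke_1": [(0, 2), (1, 1), (1, 2), (0, 1)],
}

stroke_labels = label_strokes(strokes, pixel_labels)
print(stroke_labels)
```

Voting per stroke means one stray pixel label does not change what the whole stroke is taken to depict.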

Professor Judith Fan, Assistant Professor of Psychology at Stanford University, said, "Drawing and writing are among the most quintessentially human activities and have long been useful for capturing people's observations and ideas.

"This work represents exciting progress towards AI systems that understand the essence of the ideas people are trying to get across, regardless of whether they are using pictures or text."

The research forms part of Surrey's Institute for People-Centered AI, and in particular its SketchX program. Using AI, SketchX seeks to understand the way we see the world by the way we draw it.

Professor Yi-Zhe Song, Co-director of the Institute for People-Centered AI, and SketchX lead, said, "This research is a prime example of how AI can enhance fundamental human activities like sketching. By understanding rough drawings with near-human accuracy, this technology has immense potential to empower people's natural creativity, regardless of artistic ability."

The study is posted to the arXiv preprint server, and the paper will be presented at the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR 2024), held in Seattle June 17–21, 2024.

More information: Ahmed Bourouis et al, Open Vocabulary Semantic Scene Sketch Understanding, arXiv (2023). DOI: 10.48550/arxiv.2312.12463

Journal information: arXiv

Citation: Researchers teach AI to spot what you're sketching (2024, June 17) retrieved 17 June 2024 from https://techxplore.com/news/2024-06-ai-youre.html
